NIC2001 presentation (no slides included)


NIC2001 Abstract:

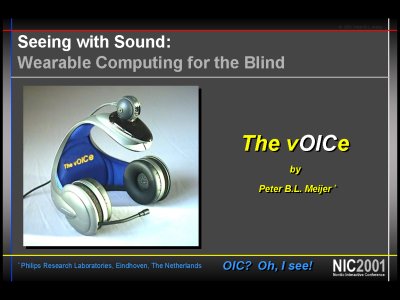

Seeing with Sound: Wearable Computing for the Blind

The slides used in this presentation are available as a PDF file.

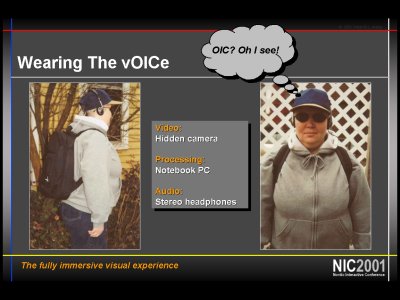

By Peter B.L. Meijer

Blind people obviously cannot see, but usually they can hear! Personal multimedia computing nowadays allows us to convert or synthesize audio and video streams in real time. Moreover, it is technically feasible to convert video into audio while preserving a significant amount of visual information in the resulting, highly complex sounds. So if blind people could learn to mentally reconstruct the visual content carried by these sounds, then they might be able to "see" with sound.

Imagine a blind person wearing a small hidden camera, a wearable computer and stereo headphones, while listening to the camera views as they are being converted live into corresponding sounds. Thus a blind person will immersively experience his or her visual environment.
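As a rough illustration of how a camera view might be turned into sound, the following Python sketch converts one grayscale frame into a short mono soundscape. The abstract does not spell out the mapping, so everything here is an assumption made for illustration: the frame is scanned column by column from left to right, each row is assigned its own sine tone (higher rows get higher pitch), and pixel brightness sets the loudness of that tone. The function name `image_to_soundscape`, the frequency range and the sample rate are hypothetical choices, not taken from The vOICe software, and stereo panning is omitted.

```python
import math


def image_to_soundscape(image, duration=1.0, sample_rate=8000,
                        f_low=500.0, f_high=5000.0):
    """Convert a grayscale image into a list of mono audio samples.

    `image` is a list of rows (top row first); each value is a brightness
    in [0, 1]. All parameters are illustrative assumptions.
    """
    n_rows = len(image)
    n_cols = len(image[0])

    # One sine frequency per row, exponentially spaced; top row is highest.
    freqs = [
        f_low * (f_high / f_low) ** ((n_rows - 1 - r) / max(n_rows - 1, 1))
        for r in range(n_rows)
    ]

    # Each image column occupies an equal slice of the scan duration.
    samples_per_col = int(duration * sample_rate / n_cols)

    samples = []
    for c in range(n_cols):              # scan left to right
        for k in range(samples_per_col):
            t = (c * samples_per_col + k) / sample_rate
            # Sum the row tones, each weighted by pixel brightness.
            s = sum(image[r][c] * math.sin(2 * math.pi * freqs[r] * t)
                    for r in range(n_rows))
            samples.append(s / n_rows)   # keep the result within [-1, 1]
    return samples


# A 2x2 frame with a single bright pixel in the top-right corner:
# silence during the first half of the scan, a high tone in the second.
frame = [[0.0, 1.0],
         [0.0, 0.0]]
soundscape = image_to_soundscape(frame)
```

To listen to the result, the samples could be scaled to 16-bit integers and written to a WAV file with Python's standard `wave` module; a live system would repeat this conversion for every new camera frame.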

This presentation will discuss and demonstrate this technology as it is available today, known as "The vOICe", and discuss user reports as well as links with neuroscience (cross-modal plasticity), philosophy ("is it vision?") and psychology.

More information is available at http://www.seeingwithsound.com

Shortly after hearing the NIC2001 presentation on the web, one of the late-blinded users of The vOICe responded to the unanswered philosophical and neuroscientific question of whether seeing-with-sound is hearing or vision, saying:

"One thing which struck me about Peter's presentation. Was the question is the soundscape being related as sound input or visual input. Since I am now so comfortable with the information provided by the program I did not stop to think of this question. Sure the soundscapes are sound but it creates a different sort of input for my mind. The sound of music or a voice is just that sound. yet the soundscapes generate sight. The sound information seems to enter my ears and is processed between my ear section of my head. The soundscape information is placed forward from my left temple across my eyes to my right temple. They are two distinct separate areas of consciousness. this may seem strange. for sound to generate two different types of input. I can not explain it. I just am aware it is true."

See also the web pages on the invited presentations at SIGGRAPH 98, VSPA 2001 and Tucson 2002, and the web page detailing the latest eye-tracking support in relation to non-invasive bionic brain interfaces (not the invasive brain implants) for The vOICe auditory display technology.