

Attainable "visual" acuity with The vOICe was studied in the paper by Haigh, Brown, Meijer and Proulx (2013) listed in the references below.


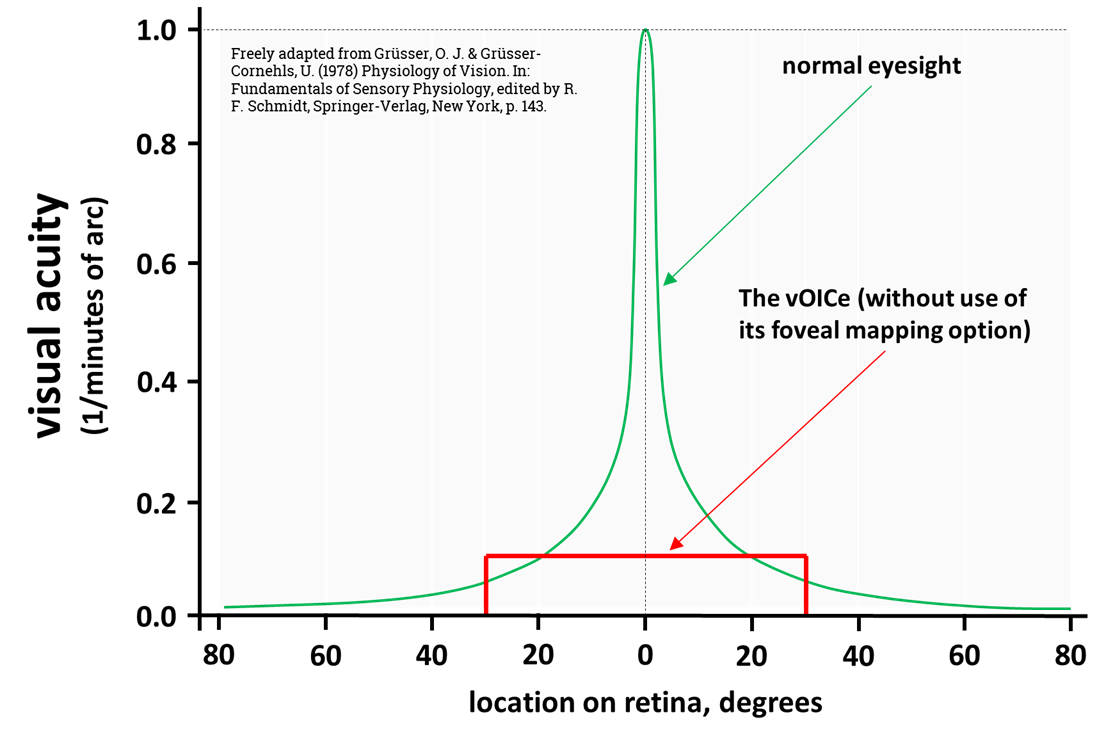

In considering visual acuity as currently provided by The vOICe for Android in combination with a smartphone or smart glasses, or The vOICe for Windows in combination with a PC camera (webcam) or USB camera glasses, it makes sense to try and compare to common established measures for human visual acuity. These measures are characterised by numbers such as "20/20" for people having normal vision and "20/200" for people on the verge of legal blindness using best corrective measures for their best eye (using glasses, contact lenses or other optics, not surgical procedures such as LASIK eye surgery).


In measuring the visual acuity of normal human vision, the eyes move around all the time (and can hardly be prevented from doing so). And yet we cannot permit the same in measuring visual acuity of a vision substitution system. If we did, it would imply that a one-pixel display would suffice in tracing out any details, which obviously defeats the goal of any useful vision substitution system with a decent resolution. In other words, in measuring visual acuity of a vision substitution device, it should in principle not be allowed to manually sweep the camera. Still, even if we adhere to that like we do on this page, this leaves open the question of how to properly define visual acuity with systems such as The vOICe that have a built-in automatic sweeping mechanism (e.g., left to right scanning) central to their cross-modal mapping approach.


Note: in the definition of legal blindness one also considers someone with normal visual acuity but having a field of view less than 20 degrees to be legally blind (through tunnel vision in this case).

As stated above, normal visual acuity is characterized by numbers such as 20/20, and a legally blind person has a visual acuity of less than 20/200 even with best corrective measures. These numbers mean approximately that someone on the verge of legal blindness can, with the best possible glasses, see details at a distance of 20 feet that someone with normal vision can still discern at 200 feet: a tenfold difference. A more precise technical definition states that normal visual acuity is the ability to resolve high contrast black letters on a white background that subtend 5 minutes of arc. There are 60 minutes of arc in one degree of arc. It is also important to realize that on average one needs between 2 and 3 pixels horizontally to get identifiable letters in a single image.

Note: by comparing subsequent images sampled at small displacements, even a single pixel could in principle be enough through scanning around a letter, so we will not allow this, again in order to obtain meaningful figures. We will however allow for negative video because that gives best auditory contrast with The vOICe for small black shapes on a uniform white background. Otherwise, one would hear mostly the background rather than the small shapes.

Assuming a camera field of view of 60 degrees, letters that are 2 to 3 pixels wide, and a typical horizontal resolution of 176 pixels for the PC camera input, we find that every pixel subtends some 0.34 degrees in width. The smallest discernible letter thus spans about one degree for 3 pixels horizontally (3*60/176=~1), or 0.7 degree for 2 pixels (2*60/176). Since one degree is 60 minutes of arc, these letters subtend roughly 40 to 60 minutes of arc, against 5 minutes of arc for normal vision. These figures mean that visual acuity is between 8 and 12 times less than with normal human vision, so it would be characterised by a visual acuity in the range 20/160 to 20/240, which would be around the 20/200 that defines the verge of legal blindness.
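The arithmetic in the preceding paragraph can be reproduced with a short Python sketch. It is only an illustration: the function name `snellen_denominator` and its parameter names are hypothetical and not part of The vOICe software, and the 60 degree field of view, the 176 pixel width and the 5 minutes of arc for normal vision are the values assumed in the text.

```python
ARCMIN_PER_DEGREE = 60      # minutes of arc in one degree
NORMAL_LETTER_ARCMIN = 5    # letter size resolved with 20/20 vision


def snellen_denominator(fov_degrees, pixels_across, letter_pixels):
    """Return X in "20/X" for the smallest letter spanning letter_pixels columns.

    Hypothetical helper: assumes the camera view of fov_degrees is spread
    evenly over pixels_across columns and that no camera sweeping is allowed.
    """
    degrees_per_pixel = fov_degrees / pixels_across
    letter_arcmin = letter_pixels * degrees_per_pixel * ARCMIN_PER_DEGREE
    return 20 * letter_arcmin / NORMAL_LETTER_ARCMIN


if __name__ == "__main__":
    for letter_pixels in (2, 3):
        x = snellen_denominator(60, 176, letter_pixels)
        print(f"{letter_pixels}-pixel letters: about 20/{x:.0f}")
```

Without intermediate rounding this gives about 20/164 and 20/245, which the text rounds to a factor of 8 to 12 and hence 20/160 to 20/240. Under the same assumed field of view, a display that is only 12 pixels wide with 2-pixel letters comes out at 20/2400.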

Note: the foveal mapping option of The vOICe was not considered here. The enlargement of the central part of the view gives a higher acuity, but the foveal mapping option is mostly meant for use in combination with a wide-angle lens, such that the gain in acuity is then partially cancelled out by a wider field of view of, say, 120 or 180 degrees.

As an illustration, we can listen to the sound of a sequence of 12 letters, each letter being 3 "voicels" (pixels) wide and 5 voicels high. The 12 letters are arranged in three groups of four identical letters, using the capitals "C", "H" and "T". So we have from left to right the string "C C C C H H H H T T T T" in small white print on a black background, or black print on a white background when allowing for and applying inverse video. The entire view is 176 voicels wide as is representative for the view of a PC camera. Now try and listen to the two-second sound sample (33K MP3 file) to see if you can discern any differences between the three groups of four letters. If this is too easy for you, try the faster one-second sound sample (18K MP3 file).

Make sure that your media player runs in autorepeat mode such that you can listen to each soundscape a number of times. With Microsoft Windows Media Player 9 and 10 you can toggle the repeat mode via Control T or via the menu Play | Repeat. With the older Microsoft Windows Media Player 6.4 you can go to the menu View | Options, select the Playback tab and then select "Repeat forever".

Note: The vOICe auditory display is not at all intended for reading printed text. For that purpose, OCR (optical character recognition) in combination with a speech synthesizer is in general far more convenient for the blind user. The use of text in the above examples is only meant to demonstrate visual acuity and perception of detail achievable through an auditory display, with envisioned applications only where no easier alternatives are available.

Of course there are many ifs and buts to making these comparisons, because these are for the most part best possible figures, using inverse video to get small high contrast white letters on a black background, without accounting for the somewhat lower default vertical acuity, and without accounting for auditory masking and the much lower "frame rate" as compared to normal vision (default one frame per second versus some effective fifteen frames per second in natural human vision). Still, with for instance the Dobelle brain implant claiming a visual acuity of 20/400, and that only in a very narrow field of view with few "pixels" (phosphenes), the auditory display seems to compare favourably. The same applies to the tongue display unit (TDU) of Bach-y-Rita et al (see also the

Other types of acuity measurements may be based on the so-called vernier acuity tests. The image below shows three vertical lines for which the bottom halves are displaced by zero or one pixel.

After clicking this image to play a two-second MP3 sample, or by downloading and importing this image file into The vOICe (Control O) and switching to half speed (F3), you should readily notice the temporal displacements, here giving rise to very fast pitch sweeps. At normal scan speed this may at first be near your threshold of perception. Feedback like this may also play a role in perceiving loss of balance.
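To get a feel for how small these temporal displacements are, the following Python sketch converts a horizontal offset in pixels into a time shift. It assumes, as elsewhere on this page, a view of 176 columns scanned in one second at normal speed and in two seconds at half speed; the function name `column_offset_ms` is hypothetical, and the actual width of the test image may differ.

```python
def column_offset_ms(columns, scan_seconds, offset_columns=1):
    """Time shift in milliseconds for a horizontal offset of offset_columns.

    Hypothetical helper: assumes the scan moves at constant speed over
    `columns` image columns in `scan_seconds` seconds.
    """
    return 1000.0 * scan_seconds * offset_columns / columns


if __name__ == "__main__":
    normal = column_offset_ms(176, 1.0)  # assumed default scan rate
    half = column_offset_ms(176, 2.0)    # assumed half speed (F3)
    print(f"normal speed: {normal:.1f} ms per pixel of displacement")
    print(f"half speed:   {half:.1f} ms per pixel of displacement")
```

Under these assumptions a one-pixel displacement corresponds to a shift of only a few milliseconds, which fits the remark that it may at first be near the threshold of perception at normal scan speed.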

As an aside, we may note that sighted people do not need character-level resolution to read text, and can recognize words from their "word image", or overall word shape. In the image below, a bitmap for the two-word phrase "word image" was downscaled to a width of 40 pixels, while still leaving the phrase readable.

We may therefore make the rough estimate that visual reading requires about 20 pixels per word width. With The vOICe by default sounding 176 image slices per second, each one pixel wide, this would be equivalent to about 9 words per second. This happens to be very similar to the (high) speech rates that blind people set their screen reader to: rates up to some 600 words per minute are used, or 10 words per second.
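The rough estimate above amounts to two divisions, sketched here in Python. The function name `words_per_second` is hypothetical; the 176 slices per second, 20 pixels per word and 600 words per minute are the figures quoted in the text.

```python
def words_per_second(slices_per_second, pixels_per_word):
    """Equivalent reading rate when each word spans pixels_per_word slices."""
    return slices_per_second / pixels_per_word


# Figures taken from the text above.
voice_rate = words_per_second(176, 20)   # one-pixel-wide slices, 20 pixels per word
screen_reader_rate = 600 / 60            # 600 words per minute, in words per second

if __name__ == "__main__":
    print(f"The vOICe equivalent: {voice_rate:.1f} words per second")
    print(f"Fast screen reader:   {screen_reader_rate:.1f} words per second")
```

This gives 8.8 words per second for the soundscape estimate against 10 words per second for the fastest screen reader settings.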

Finally, we may note that another popular visual acuity test consists of the so-called Landolt C rings, which are circles with a single gap along their circumference. The Landolt rings are all identical except for a rotation over multiples of 45 degrees. Their shapes are therefore normally considered more neutral than letters with respect to the apparent visual acuity for different rings. However, since frequency and time make for two qualitatively different axes for representing images with The vOICe auditory display, rotational invariance of the thresholds for perception is not as strong as in visual perception with the eyes. The gap in the Landolt rings is more easily perceived when it is at the top or bottom of the ring than when it is on the left or right.

To experience this, you may download the inverse video animated GIF image of the Landolt C rings and next import this animation into The vOICe for Windows software via its File menu, or by pressing Control O to get to its file requester. The animation starts with the Landolt C having the gap on the right, and subsequent image frames have the Landolt C rotated clockwise by 45 degree increments, and each time alternated with an image frame of a full circle (without any gap). You will likely find that having the gaps on the left or on the right is significantly more difficult to perceive than having the gap at any of the other positions. However, after a while you may start noticing the spectral gaps as compared to the full circles that do not have these gaps. Nevertheless, this example warns us that in considering vision substitution approaches we must remain very careful about possible implicit assumptions in applying established visual acuity tests that were so far developed for use with sighted people. Further systematic vision substitution and prosthetic vision experiments may be based on the use of Gabor functions in various orientations (and after turning off The vOICe's automatic contrast enhancement via function key F6, to avoid boosting visual artefacts that the sighted cannot see either).


References:

W. H. Dobelle, "Artificial Vision for the Blind by Connecting a Television Camera to the Visual Cortex," ASAIO Journal (American Society for Artificial Internal Organs), January - February 2000.

A. Haigh, D. J. Brown, P. Meijer and M. J. Proulx, "How well do you see what you hear? The acuity of visual-to-auditory sensory substitution," Frontiers in Cognitive Science, Vol. 4, June 2013. Available on-line.

P. B. L. Meijer, "An Experimental System for Auditory Image Representations," IEEE Transactions on Biomedical Engineering, Vol. 39, No. 2, pp. 112-121, Feb 1992. Reprinted in the 1993 IMIA Yearbook of Medical Informatics, pp. 291-300. Electronic version of full paper available on-line.

A. Nau, M. Bach and C. Fisher, "Clinical Tests of Ultra-Low Vision Used to Evaluate Rudimentary Visual Perceptions Enabled by the BrainPort Vision Device," Trans. Vis. Sci. Tech., Vol. 2, No. 3, June 2013. Available on-line.

E. Peli, "Testing vision is not testing for vision," Translational Vision Science & Technology, Vol. 9, No. 13, article 32, December 2020. Available on-line.

E. Sampaio, S. Maris and P. Bach-y-Rita, "Brain plasticity: 'visual' acuity of blind persons via the tongue," Brain Research, Vol. 908, No. 2, pp. 204-207, July 2001.

Note: when using the visual acuity calculations as presented on this page, the 12 × 12 electrode tongue display would have an acuity on the order of 20/2400, and not nearly 20/860 or better as reported in the Brain Research article. This is because definitions and procedures for measuring the visual acuity of vision substitution devices play such a decisive role here, and at the moment no standards exist that fully cover the methodological pitfalls as described on this page.

M. Ziat, O. Gapenne, C. Lenay and J. Stewart, "Perception limits of a sensory substitution device by using an acuity test," Proc. Human Machine iNteraction Conference (Human'07), Timimoun, Algeria, March 12-14, 2007, pp. 201-206. Available on-line.

D.-R. Chebat, C. Rainville, R. Kupers and M. Ptito, "Tactile-'visual' acuity of the tongue in early blind individuals," NeuroReport, Vol. 18, No. 18, pp. 1901-1904, December 3, 2007.

L. Fu, S. Cai, H. Zhang, G. Hu and X. Zhang, "Psychophysics of reading with a limited number of pixels: Towards the rehabilitation of reading ability with visual prosthesis," Vision Research, Vol. 46, No. 8-9, pp. 1292-301, 2006.


Abstract (Haigh et al., 2013) - Sensory substitution devices (SSDs) aim to compensate for the loss of a sensory modality, typically vision, by converting information from the lost modality into stimuli in a remaining modality. “The vOICe” is a visual-to-auditory SSD which encodes images taken by a camera worn by the user into “soundscapes” such that experienced users can extract information about their surroundings. Here we investigated how much detail was resolvable during the early induction stages by testing the acuity of blindfolded sighted, naïve vOICe users. Initial performance was well above chance. Participants who took the test twice as a form of minimal training showed a marked improvement on the second test. Acuity was slightly but not significantly impaired when participants wore a camera and judged letter orientations “live”. A positive correlation was found between participants' musical training and their acuity. The relationship between auditory expertise via musical training and the lack of a relationship with visual imagery, suggests that early use of a SSD draws primarily on the mechanisms of the sensory modality being used rather than the one being substituted. If vision is lost, audition represents the sensory channel of highest bandwidth of those remaining. The level of acuity found here, and the fact it was achieved with very little experience in sensory substitution by naïve users is promising.

Abstract (Meijer, 1992) - This paper presents an experimental system for the conversion of images into sound patterns. The system was designed to provide auditory image representations within some of the known limitations of the human hearing system, possibly as a step towards the development of a vision substitution device for the blind. The application of an invertible (1-to-1) image-to-sound mapping ensures the preservation of visual information.

The system implementation involves a pipelined special purpose computer connected to a standard television camera. The time-multiplexed sound representations, resulting from a real-time image-to-sound conversion, represent images up to a resolution of 64 × 64 pixels with 16 grey-tones per pixel. A novel design and the use of standard components have made for a low-cost portable prototype conversion system having a power dissipation suitable for battery operation.

Computerized sampling of the system output and subsequent calculation of the approximate inverse (sound-to-image) mapping provided the first convincing experimental evidence for the preservation of visual information in the sound representations of complicated images. However, the actual resolution obtainable with human perception of these sound representations remains to be evaluated.


Abstract (Nau et al., 2013) - Purpose: We evaluated whether existing ultra-low vision tests are suitable for measuring outcomes using sensory substitution. The BrainPort is a vision assist device coupling a live video feed with an electrotactile tongue display, allowing a user to gain information about their surroundings. Methods: We enrolled 30 adult subjects (age range 22–74) divided into two groups. Our blind group included 24 subjects (n = 16 males and n = 8 females, average age 50) with light perception or worse vision. Our control group consisted of six subjects (n = 3 males, n = 3 females, average age 43) with healthy ocular status. All subjects performed 11 computer-based psychophysical tests from three programs: Basic Assessment of Light Motion, Basic Assessment of Grating Acuity, and the Freiburg Vision Test as well as a modified Tangent Screen. Assessments were performed at baseline and again using the BrainPort after 15 hours of training. Results: Most tests could be used with the BrainPort. Mean success scores increased for all of our tests except contrast sensitivity. Increases were statistically significant for tests of light perception (8.27 ± 3.95 SE), time resolution (61.4% ± 3.14 SE), light localization (44.57% ± 3.58 SE), grating orientation (70.27% ± 4.64 SE), and white Tumbling E on a black background (2.49 logMAR ± 0.39 SE). Motion tests were limited by BrainPort resolution. Conclusions: Tactile-based sensory substitution devices are amenable to psychophysical assessments of vision, even though traditional visual pathways are circumvented. Translational Relevance: This study is one of many that will need to be undertaken to achieve a common outcomes infrastructure for the field of artificial vision.


Abstract (Sampaio et al., 2001) - The 'visual' acuity of blind persons perceiving information through a newly developed human-machine interface, with an array of electrical stimulators on the tongue, has been quantified using a standard Ophthalmological test (Snellen Tumbling E). Acuity without training averaged 20/860. This doubled with 9 h of training. The interface may lead to practical devices for persons with sensory loss such as blindness, and offers a means of exploring late brain plasticity.


Paul Bach-y-Rita (1934-2006)