There is an affordable 3D webcam on the market: the


This page discusses how The vOICe technology supports binocular vision with suitable stereoscopic camera hardware. In orientation and mobility applications for blind users, the orientation component is supported well by the standard single camera setup, but the mobility component can benefit from better depth and distance perception in order to detect nearby objects and obstacles. Binocular vision makes this possible, and will thus further enhance the applicability and versatility of The vOICe as an ETA (Electronic Travel Aid) for the blind in addition to its general vision substitution and synthetic vision features.

In designing an electronic travel aid (ETA) for the blind, a key advantage of a sonar device over a camera used to be that it is relatively easy with sonar to measure distances to nearby obstacles, allowing one to generate a warning signal when there is a collision threat. With a single camera this turns out to be extremely hard, because the distance information in a static camera view is essentially ambiguous, and deriving distances from recognized objects requires much a priori knowledge about the physical world. This is what people blinded in only one eye do all the time, without apparent effort, but for machine vision, the required recognition of objects remains a daunting task. One partial solution is to derive size and distance information from video sequences of a single camera while moving around (this is what people blind in one eye do as well), but a more powerful and reliable method is to make use of binocular vision, also called stereoscopic vision or stereopsis. By comparing the slight differences in images obtained from two different simultaneous viewpoints, the distances to nearby objects and obstacles can be estimated. This approach also works with static scenes. Moreover, by now knowing distances and apparent (angular) sizes in the camera view, the user can deduce the actual sizes of nearby objects without first having to recognize them.
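The relation between disparity, distance and actual size can be made concrete with a small sketch. It assumes an idealized parallel (pinhole) camera pair; the function names, the 6 cm baseline and the 500 pixel focal length are illustrative assumptions and not parameters of The vOICe.

```python
import math

def distance_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Distance (m) to a point seen with the given left-right disparity (pixels)."""
    if disparity_px <= 0:
        return math.inf  # zero disparity: the point is effectively at infinity
    return focal_length_px * baseline_m / disparity_px

def physical_size(distance_m, angular_size_rad):
    """Actual extent (m) of an object that spans the given visual angle."""
    return 2.0 * distance_m * math.tan(angular_size_rad / 2.0)

# Hypothetical numbers: cameras 6 cm apart, focal length of 500 pixels.
z = distance_from_disparity(20, focal_length_px=500, baseline_m=0.06)
width = physical_size(z, math.radians(10))
print(f"distance {z:.2f} m, width {width:.2f} m")
```

Halving the disparity doubles the estimated distance, which is why depth resolution degrades quickly for distant items while nearby obstacles stand out.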

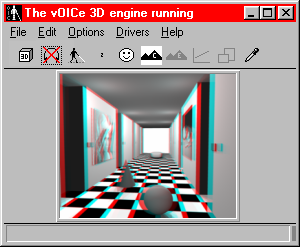

3D anaglyph for sighted viewing with red/green glasses

The following example illustrates how a binocular view with stereo images from a visually highly cluttered scene gets processed by The vOICe into a distance map where brighter (louder) means closer. The nearby tree trunk clearly stands out in the resulting distance map, as do some parts of the parked car that is right behind the tree, whereas the clutter of the visually complex distant background is completely suppressed. The sky and distant houses, other parked cars and trees are rendered invisible in favour of nearby objects and obstacles. The 18K MP3 audio sample shows the corresponding soundscape for the extracted distance map.

Red color component of greyscale left-eye image.

Cyan color component of greyscale right-eye image.

Combined red-cyan 3D anaglyph.

The vOICe distance map as extracted from anaglyph image.

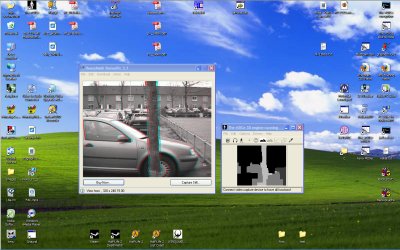

Registered users can load the anaglyph image into The vOICe for Windows after switching to the Stereoscopic View mode via the menu Options | 3D to get a distance map derived in real-time from the disparities in this anaglyph view. When experimenting with other anaglyphs, note that the color filters may have to be different, and more importantly, that only anaglyphs derived from greyscale views will give good results due to the required strict separation of left and right view.
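As a rough illustration of how a distance map can be derived from anaglyph disparities, the sketch below splits a red-cyan anaglyph into its left (red) and right (green) views and matches small windows along each scanline. This is generic block matching with a sum of absolute differences, not The vOICe's actual algorithm, and the channel assignment, window size and disparity range are assumptions.

```python
def split_anaglyph(rgb_rows):
    """Split a red-cyan anaglyph into left (red) and right (green) greyscale views."""
    left = [[r for (r, g, b) in row] for row in rgb_rows]
    right = [[g for (r, g, b) in row] for row in rgb_rows]
    return left, right

def disparity_row(left_row, right_row, max_disparity=4, half_window=1):
    """Per-pixel disparity along one scanline via sum of absolute differences."""
    width = len(left_row)
    result = []
    for x in range(width):
        best_d, best_cost = 0, None
        for d in range(max_disparity + 1):
            cost = 0
            for k in range(-half_window, half_window + 1):
                xl = min(max(x + k, 0), width - 1)      # clamp at image borders
                xr = min(max(x + k - d, 0), width - 1)  # nearby items shift left in the right view
                cost += abs(left_row[xl] - right_row[xr])
            if best_cost is None or cost < best_cost:   # ties keep the smaller disparity
                best_d, best_cost = d, cost
        result.append(best_d)
    return result

def distance_map(rgb_rows, max_disparity=4):
    """Brightness 0..255 per pixel, proportional to disparity (brighter = closer)."""
    left, right = split_anaglyph(rgb_rows)
    return [[255 * d // max_disparity for d in disparity_row(l, r, max_disparity)]
            for l, r in zip(left, right)]

# Synthetic scanline: a bright object at x = 8..10 in the left view
# appears at x = 5..7 in the right view (disparity 3) on a dark background.
row = [(200 if 8 <= x <= 10 else 0,
        200 if 5 <= x <= 7 else 0,
        200 if 5 <= x <= 7 else 0) for x in range(16)]
demo_map = distance_map([row])
print(demo_map[0])
```

Because the two views are recovered from separate color channels, any color content that leaks between channels corrupts the matching, which is why anaglyphs derived from greyscale views are needed. The sketch also produces spurious readings next to the object, where the background is visible to only one camera.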

Various stereoscopic vision options can be set via the menu Edit | Stereoscopic Preferences.

Among the radio button mapping options is also the possibility to sound the left eye image to the left ear and the right eye image to the right ear (or the other way around by swapping the color filter selections for left and right eye views, that is, sound the left eye image to the right ear and the right eye image to the left ear). When the camera views are properly calibrated to have coinciding views for large distances, the soundscapes will be the same as without stereo vision for distant items and landmarks, and differences will arise only from visual disparity at close range. A key advantage over sounding a depth map is that distant landmarks - important for orientation - are not discarded, but a disadvantage is that visual disparity will be less salient than when sounding the corresponding depth map. The following example illustrates for a view from the Avatar 3D science fiction movie how the disparity of nearby objects causes a subtle but noticeably stronger spatialization in the corresponding anaglyph soundscape. It is not yet known if blind people can learn to exploit this.

Non-anaglyph soundscape.

3D anaglyph soundscape.

Can you hear differences between the regular non-anaglyph soundscape and the 3D anaglyph soundscape?


Home-made setup using HeavyMath Cam 3D driver or Minoru 3D webcam

An easy and cheap way to quickly hack together very basic stereo vision support for The vOICe is to use two identical webcams with a WDM driver (most modern webcams should qualify). The third-party program HeavyMath Cam 3D lets you capture from the two webcams and show live anaglyph video on the screen. Alternatively, you can use the Minoru 3D webcam, in combination with Microsoft AMCAP to show the anaglyph view on the screen. The Minoru 3D webcam already contains two identical webcams mounted in a convenient rigid frame. In addition, you run the registered version of The vOICe, and you turn on the active window client sonification mode via Control F9. Next you Alt tab to the HeavyMath Cam 3D, Minoru 3D or AMCAP window to capture and sound the live anaglyph view with The vOICe. Next you switch The vOICe to its stereo vision mode via the menu Options | 3D | Stereoscopic View, and depending on the current settings in the menu Edit | Stereoscopic Preferences, The vOICe will use the anaglyph screen view to calculate and sound a live depth map or sound the left camera view to the left ear and the right camera view to the right ear.

Moreover, blind users can perform horizontal and vertical camera calibration of the two views independently, by selecting the option for sounding the difference between left-eye view and right-eye view, and then adjusting (mis)alignment until all distant visual items vanish from the view, indicating a perfect match between left and right view for large distances.

A limitation with the current procedure is that you will also see/hear the window borders and menu of the anaglyph window, but this can be alleviated by setting a relatively high capture resolution such as VGA. You will also typically need to adjust some parameter settings in The vOICe, such as for disparity, to obtain acceptable results. Of course with two separate webcams you also need to improvise some stable fixture to mount and adjust the two webcams such that their views coincide at infinity. Finally, always first start the anaglyph viewing software and only then The vOICe, such that The vOICe will not connect to (and thereby block) one of the two webcams that the anaglyph viewing software needs to connect to.
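The calibration idea of making distant items vanish from the left-right difference view can be sketched numerically. The code below is only an illustration under stated assumptions: it searches a small range of integer pixel offsets for the one that makes the difference view quietest, whereas the procedure described above is a manual adjustment by ear. The function names and the synthetic test pattern are hypothetical.

```python
def mean_abs_difference(left, right, dx, dy):
    """Mean |left - right| over the overlap, with the right view offset by (dx, dy)."""
    height, width = len(left), len(left[0])
    total, count = 0, 0
    for y in range(height):
        for x in range(width):
            xs, ys = x + dx, y + dy
            if 0 <= xs < width and 0 <= ys < height:
                total += abs(left[y][x] - right[ys][xs])
                count += 1
    return total / count if count else float("inf")

def best_alignment(left, right, max_shift=3):
    """Offset (dx, dy) for which the difference view is quietest."""
    candidates = [(dx, dy)
                  for dy in range(-max_shift, max_shift + 1)
                  for dx in range(-max_shift, max_shift + 1)]
    return min(candidates,
               key=lambda s: mean_abs_difference(left, right, s[0], s[1]))

def pattern(x, y):
    """Synthetic brightness pattern standing in for a distant scene."""
    return (7 * x + 13 * y + 3 * x * y) % 251

# The right view shows the same distant scene, misaligned by 2 pixels
# horizontally and 1 pixel vertically.
left_view = [[pattern(x, y) for x in range(12)] for y in range(10)]
right_view = [[pattern(x - 2, y + 1) for x in range(12)] for y in range(10)]
offset = best_alignment(left_view, right_view)
print(offset, mean_abs_difference(left_view, right_view, *offset))
```

With a real scene the residual difference would not drop to exactly zero, and only distant parts of the view should be used, since nearby items legitimately differ between the two views.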

Home-made setup using Microsoft Kinect

If you have any program that shows a live Kinect depth map on the computer screen, you can again make use of The vOICe active window client sonification mode via Control F9, and Alt tab to the window that shows the Kinect depth map. Thus it is very easy to create an auditory display version of the Kinect for the Blind project that won second place in the Russian finals of the Microsoft Imagine Cup 2011. In this case you do not need the stereo vision features of The vOICe because the Kinect device and its driver directly give a real-time 3D depth map. Unfortunately, the Kinect thus far fails in typical outdoor lighting conditions where sunshine overwhelms its projected infrared dot patterns, and the Kinect is also too bulky for unobtrusive head-mounted use. Indoor use of The vOICe in combination with the Kinect was demonstrated in 2015 by Giles Hamilton-Fletcher and Jamie Ward of the University of Sussex in the BBC television program BBC Click (YouTube). The vOICe soundscapes of Kinect depth maps let blind people "see" nearby objects and their shapes, including for instance the pose of a person.


Stereo vision hardware

With the exception of the Minoru 3D Webcam, there are no suitable and affordable stereo video cameras on the market yet. However, a physicist or electronics engineer should be able to design and construct a dedicated stereo camera ("3D camera") setup by combining some standard commercially available components. One could use two black-and-white (greyscale) cameras to have greyscale video directly. The image on the left shows the simplified schematics that are obtained when using greyscale cameras. The greyscale video signal from the left-eye camera could be used as the "red" (R) signal for the RGB input of a video capture card while the greyscale video signal from the right-eye camera could form the "green" (G) or "cyan" (G+B) signal for this same RGB input.

Note that the two cameras need to be "genlocked" to have synchronized video signals that can be captured as separate video signals and then merged into one color signal. The use of genlock requires at least one of the cameras to offer a synchronization input. Without genlock support in the cameras, the capture card must have proper provisions for video frame synchronization.

Vendors that can offer an end-user hardware solution for anaglyph video generation for The vOICe are welcome to report, for possible inclusion in the third-party suppliers page.

Remarks

As an alternative for using black-and-white cameras, one could also use two genlocked color cameras, in which case one should first mix the RGB signals from each color camera into greyscale video, because we need greyscale-based anaglyphs for good results. This is shown schematically in the image on the left. The image on the right again stresses that picking and capturing individual color components directly is not a good idea: that would for instance render a bright red object on a black background invisible in the right-eye view, while in reality its brightness should still make it stand out - as needed for making a distance map from the left-right viewing disparity! Next, the greyscale video signal from the left-eye camera could be used as the "red" (R) signal for the RGB input of a regular video capture card while the greyscale video signal from the right-eye camera could form the "green" (G) or "cyan" (G+B) signal for this same RGB input.
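The difference between greyscale-based anaglyphs and directly picked color components can be shown per pixel. The sketch below is a software analogue of the signal mixing described above; the ITU-R BT.601 luma weights are an assumption (any reasonable brightness mix would do), and the function names are hypothetical.

```python
def to_grey(r, g, b):
    """Mix RGB into one brightness value (ITU-R BT.601 luma weights, an assumption)."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def anaglyph_pixel(left_rgb, right_rgb):
    """Red-cyan anaglyph pixel built from the greyscale of each camera view."""
    left_grey = to_grey(*left_rgb)
    right_grey = to_grey(*right_rgb)
    return (left_grey, right_grey, right_grey)  # R = left eye, G and B = right eye

def naive_anaglyph_pixel(left_rgb, right_rgb):
    """Picks raw color components directly; shown only to illustrate the problem."""
    return (left_rgb[0], right_rgb[1], right_rgb[2])

bright_red = (255, 0, 0)  # a bright red object seen by both cameras
print(anaglyph_pixel(bright_red, bright_red))        # (76, 76, 76)
print(naive_anaglyph_pixel(bright_red, bright_red))  # (255, 0, 0)
```

In the naive version the red object has zero intensity in the right-eye (green and blue) channels, so it cannot be matched between the two views, whereas the greyscale-based version keeps it equally visible to both eyes.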

The vOICe's advanced stereo vision functionality has so far undergone only limited testing and good results under all circumstances cannot be guaranteed. Especially in mobile applications, one has to carefully consider the possible safety hazards caused by any depth mapping artefacts and inaccuracies. Step-downs in particular will remain hard to detect reliably, while it can clearly be dangerous when any nearby or fast-approaching objects go entirely undetected ("time-to-impact" is often a more relevant measure than actual physical distance, and is applied in The vOICe's monocular "collision threat analysis" option). On the other hand, some depth mapping artefacts and inaccuracies may be tolerable. For instance, (small) parts of nearby objects may appear to be at a larger distance as long as some parts of these nearby objects still get their correct nearby depth reading. "False alarms" where (even small) parts of distant objects appear to be at close range are more disturbing. Any receding objects may even be deliberately filtered out as they would not normally present a safety hazard, while a reduction of clutter could reduce the mental load for the blind user.
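Why time-to-impact can matter more than distance is easy to see with two successive distance readings. The sketch below is a hypothetical illustration that assumes a constant closing speed between readings; it is not how The vOICe's monocular collision threat analysis works, and the 2-second threshold is an arbitrary assumption.

```python
def time_to_impact(previous_distance_m, current_distance_m, interval_s):
    """Seconds until contact at constant closing speed, or None if not approaching."""
    closing_speed = (previous_distance_m - current_distance_m) / interval_s
    if closing_speed <= 0:
        return None  # static or receding: can be filtered out as no threat
    return current_distance_m / closing_speed

def is_threat(previous_distance_m, current_distance_m, interval_s, threshold_s=2.0):
    """True when the estimated time-to-impact falls below the threshold."""
    tti = time_to_impact(previous_distance_m, current_distance_m, interval_s)
    return tti is not None and tti < threshold_s

# Readings taken 0.5 s apart (hypothetical values).
print(is_threat(3.0, 2.5, 0.5))   # nearby but slow: 2.5 s to impact
print(is_threat(10.0, 7.0, 0.5))  # distant but fast: about 1.2 s to impact
print(is_threat(1.0, 1.2, 0.5))   # close but receding
```

In this example the slowly approached object at 2.5 m is less urgent than the fast object still 7 m away, while the receding object is ignored altogether.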

Left and right camera images must be carefully calibrated, such that they coincide at infinity (parallel camera model). Lacking that, the machine equivalent of the medical condition of "strabismus" (eye misalignment) may yield poor stereo vision results.

Related work

Companies offering dedicated stereo vision cameras include Point Grey Research (FireFly, ptgrey.com), Focus Robotics (nDepth, focusrobotics.com) and Videre Design (DCAM, videredesign.com), and companies for time-of-flight (TOF) based image sensors and cameras include(d) SwissRanger/MESA/CSEM (swissranger.ch/mesa-imaging.ch, originating from Swiss Center for Electronics and Microtechnology, Inc). The underlying principle of time-of-flight cameras is also known as LIDAR (LIght Detection And Ranging). Companies may wish to team up with The vOICe project to showcase their 3D camera products and concepts. Note that The vOICe for Windows does not support Firewire (IEEE-1394), because it requires Video for Windows compliance.

In the future, The vOICe for Android may support sounding depth maps generated by Google Tango or Intel RealSense based smartphones or augmented reality glasses.

Related work on using binocular vision input for blind people has been done by Phil Picton and Michael Capp of Nene College, Northampton, UK, as described in their paper ``The optophone: an electronic blind aid'' (abstract).

Another project using binocular vision input to create an auditory display for the blind is the Virtual Acoustic Space (Espacio Acústico Virtual, or EAV) project of the Institute of Astrophysics of the Canary Islands (IAC) and the University of La Laguna, Tenerife, Spain.

Stephen Se and Michael Brady published an article titled ``

``A Stereo-vision System for the Visually Impaired,'' (Technical Report 2000-41x-1, School of Engineering, University of Guelph), S. Areibi and J. Zelek, ``A Smart Reconfigurable Visual System for the Blind,'' Conf. Smart Systems and Devices (SSD), Hammamet, Tunisia, March 27-30, 2001, and J. Zelek, D. Bullock, S. Bromley and Haisheng Wu, ``

Yoshihiro Kawai et al. from Tsukuba Electrotechnical Laboratory and Tsukuba College of Technology in Japan worked on a stereo vision system for the blind using a 3D spatial audio display, as described in Y. Kawai, M. Kobayashi, H. Minagawa, M. Miyakawa and F. Tomita, ``A Support System for Visually Impaired Persons Using Three-Dimensional Virtual Sound,'' Int. Conf. Computers Helping People with Special Needs (ICCHP 2000), pp. 327-334, Karlsruhe, Germany, July 17-21, 2000, Y. Kawai, F. Tomita, ``A Visual Support System for Visually Impaired Persons Using Acoustic Interface,'' IAPR Workshop on Machine Vision Applications (MVA 2000), pp. 379-382, Tokyo, Japan, Nov. 28-30, 2000, Y. Kawai, F. Tomita, ``A Support System for Visually Impaired Persons Using Acoustic Interface - Recognition of 3-D Spatial Information,'' HCI International 2001, Vol. 1, pp. 203-207, and Y. Kawai, F. Tomita, ``A Support System for Visually Impaired Persons to Understand Three-dimensional Visual Information Using Acoustic Interface,'' International Conference on Pattern Recognition (ICPR 2002), Vol. 3, pp. 974-977.

Xiaoye Lu and Roberto Manduchi of the Department of Computer Engineering at the University of California, Santa Cruz, worked on a system for ``Detection and Localization of Curbs and Stairways Using Stereo Vision,'' International Conference on Robotics and Automation (ICRA 2005), Barcelona, Spain, April 18-22, 2005.

Simon Meers and Koren Ward of the School of IT and Computer Science at the University of Wollongong worked on a stereo vision system for the blind project named "ENVS" (electro-neural vision system), ``A Substitute Vision System for Providing 3D Perception and GPS Navigation via Electro-Tactile Stimulation,'' 1st International Conference on Sensing Technology (ICST 2005), Palmerston North, New Zealand, November 2005. Koren Ward also applied for a patent on a tactile display device for providing perception of the physical environment.

Gopalakrishnan Sainarayanan of the University Malaysia Sabah is working on a system called SVETA (Stereo Vision based Electronic Travel Aid).

Yi-Zeng Hsieh of the Department of Computer Science and Information Engineering at the National Central University of Taiwan in June 2006 completed an M.Sc. thesis titled ``A Stereo-Vision-Based Aid System for the Blind.''

Lise Johnson and Charles Higgins of the University of Arizona worked on a stereo vision based system described in ``A Navigation Aid for the Blind Using Tactile-Visual Sensory Substitution,'' Proc. 28th Ann. Int. Conf. IEEE Eng. in Medicine and Biology Society (EMBC 2006), pp. 6298-6292, New York, 2006.

Andreas Hub, Tim Hartter and Thomas Ertl of the University of Stuttgart, Germany, worked on a stereo vision based system described in ``Interactive Tracking of Movable Objects for the Blind on the Basis of Environment Models and Perception-Oriented Object Recognition Methods,'' Proc. 8th Int. ACM SIGACCESS Conf. Computers and Accessibility (ASSETS '06), pp. 111-118, Portland, Oregon, 2006.

The European IST project CASBliP (Cognitive Aid System for Blind People, 2006-2009) aims to apply stereo vision and time-of-flight 3D vision techniques (UseRCams 3D sensor) for rendering enhanced images and audio maps.

In 2008, Dah-Jye Lee, Jonathan Anderson and James Archibald of Brigham Young University, USA, proposed a ``Hardware Implementation of a Spline-Based Genetic Algorithm for Embedded Stereo Vision Sensor Providing Real-Time Visual Guidance to the Visually Impaired,'' EURASIP Journal on Advances in Signal Processing, Vol. 2008, Article ID 385827, 10 pages, 2008.

At the October 18, 2008 Workshop on Computer Vision Applications for the Visually Impaired (CVAVI 08) in Marseille, France, Juan Manuel Sáez Martínez and Francisco Escolano Ruiz of the University of Alicante in Spain proposed ``Stereo-based Aerial Obstacle Detection for the Visually Impaired.''

At the 2009 Conference and Workshop on Assistive Technologies for Vision and Hearing Impairment (CVHI 2009), M. Bujacz et al. presented ``A proposed method for sonification of 3D environments.''

Vimal Mohandas and Roy Paily published a paper titled ``Stereo disparity estimation algorithm for blind assisting system'' in the CSI Transactions on ICT, March 2013, Vol. 1, No. 1, pp. 3-8.

Copyright © 1996 - 2024 Peter B.L. Meijer