Auditory display for synthetic vision

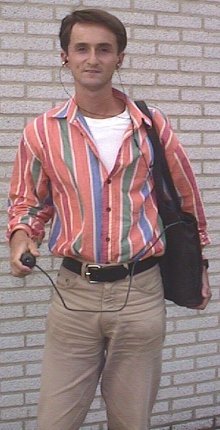

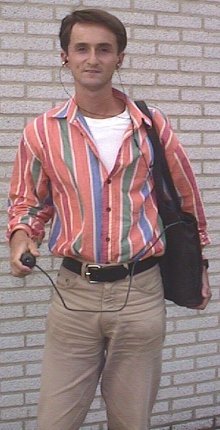

The vOICe hardware prototype


Originally, a hardware prototype nicknamed The vOICe (OIC? Oh I see!) was developed to prove the technical feasibility of the underlying concepts. It starts by subdividing an electronic photograph into 64 rows and 64 columns, giving 4096 pixels. Shading is reduced to 16 levels of grey. The image is then translated into sound, column by column. The top pixel in a column gives a high pitch and the bottom pixel a low pitch; an intermediate position gives an intermediate pitch. The grey level is expressed by loudness. All pixels in a single column are heard simultaneously, much like a musical chord. With subsequent image columns, these chords change according to the pixel brightness distribution within each column. In this way, the image content is translated into sound by scanning through all 64 columns, from left to right. Finally, a click marks the beginning of a new image. Typically, fresh electronic photographs are taken and converted into sound at one-second intervals. Of course, there is a lot more to be said about the technical issues relating to this general image-to-sound mapping for artificial vision.
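The column-by-column mapping described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the prototype's actual implementation: the 64 x 64 grid, the 16 grey levels and the one-second scan come from the description, while the sample rate, the 500 to 5000 Hz pitch range, the exponential spacing of pitches and all function names are assumptions made for the example. The click that marks the start of each image is left out.

```python
import math

ROWS, COLS, LEVELS = 64, 64, 16   # grid size and grey levels from the description
SCAN_SECONDS = 1.0                # one image per second, as in the description
SAMPLE_RATE = 11025               # assumption: audio samples per second
F_LOW, F_HIGH = 500.0, 5000.0     # assumption: pitch range in Hz


def row_frequency(row):
    """Row 0 (top) gets the highest pitch, the bottom row the lowest.

    Assumption: pitches are spaced exponentially between F_LOW and F_HIGH.
    """
    frac = (ROWS - 1 - row) / (ROWS - 1)
    return F_LOW * (F_HIGH / F_LOW) ** frac


def quantize(value):
    """Reduce a brightness in [0.0, 1.0] to one of 16 grey levels (0..15)."""
    return min(LEVELS - 1, max(0, int(value * LEVELS)))


def image_to_sound(image):
    """Scan the image left to right and return a list of audio samples.

    `image` is a list of ROWS rows, each a list of COLS brightness values
    in [0.0, 1.0]. Every column becomes a short chord: one sine wave per
    non-black pixel, with loudness proportional to the grey level.
    """
    samples_per_col = int(SAMPLE_RATE * SCAN_SECONDS) // COLS
    freqs = [row_frequency(r) for r in range(ROWS)]
    samples = []
    for col in range(COLS):
        # Collect (frequency, amplitude) pairs for the lit pixels in this column.
        chord = []
        for r in range(ROWS):
            amp = quantize(image[r][col]) / (LEVELS - 1)
            if amp > 0:
                chord.append((freqs[r], amp))
        for n in range(samples_per_col):
            t = (col * samples_per_col + n) / SAMPLE_RATE
            s = sum(a * math.sin(2 * math.pi * f * t) for f, a in chord)
            samples.append(s / ROWS)  # dividing by ROWS keeps samples in [-1, 1]
    return samples


# Example: a bright diagonal line from the top left to the bottom right,
# which should sound like a tone falling in pitch over one second.
diagonal = [[1.0 if r == c else 0.0 for c in range(COLS)] for r in range(ROWS)]
diagonal_sound = image_to_sound(diagonal)
```

The resulting sample list could be written to a WAV file or passed to any audio library; that step is omitted here to keep the sketch focused on the mapping itself.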

The vOICe software


A good mapping must preserve simplicity and similarity: simple images should sound simple, and simple shifts in position should lead to correspondingly simple perceptual changes in the sounds. Complexity should not arise from the mapping itself, but only from the complexity of the content of the image being mapped into sound! Furthermore, the mapping should not only allow one to distinguish different image sounds and to learn to associate a given set of auditory patterns with their visual counterparts, but also to analyze them and generalize to situations that were not among the training examples.
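The similarity property can be illustrated with a small symbolic sketch. Instead of audio samples, each lit pixel is represented here as an event (onset time, pitch index, loudness); this event representation and the helper names are assumptions made for the example, not part of The vOICe. Under the column-by-column scan, moving a shape one column to the right only delays its sound, and moving it one row up only raises its pitch.

```python
ROWS, COLS = 64, 64
COLUMN_SECONDS = 1.0 / COLS  # one-second scan divided over 64 columns


def sound_events(pixels):
    """Turn lit pixels into sorted (onset_seconds, pitch_index, loudness) events.

    `pixels` maps (row, col) to a grey level. Row 0 is the top of the image,
    so it receives the highest pitch index.
    """
    return sorted(
        (col * COLUMN_SECONDS, ROWS - 1 - row, level)
        for (row, col), level in pixels.items()
    )


def shift(pixels, d_row, d_col):
    """Move every lit pixel by d_row rows (down) and d_col columns (right)."""
    return {(r + d_row, c + d_col): v for (r, c), v in pixels.items()}


# A small two-pixel shape, then the same shape moved right and moved up.
shape = {(10, 5): 15, (11, 5): 7}
base = sound_events(shape)
moved_right = sound_events(shift(shape, 0, 1))   # same pitches, later onset
moved_up = sound_events(shift(shape, -1, 0))     # same onsets, higher pitch
```

Comparing `base` with `moved_right` and `moved_up` event by event shows that only one component changes in each case, which is the kind of simple perceptual change the principle asks for.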

The vOICe hardware did not become available as a product. In the photograph, however, a software version of The vOICe is being used, running on a fast Pentium notebook PC (inside the shoulder bag) with a PC camera and headphones. This software is available as The vOICe for Windows, which lets you experience The vOICe yourself on Microsoft Windows. Try it!
